Published: 2023-02-01

As Artificial Intelligence (AI) and digital media technologies permeate different sectors of human activity, pertinent questions are being raised about how these technologies can address problems in healthcare. For example, how can AI be utilised to track and predict health behaviours and attitudes? How can computerised systems be developed to understand, ascertain, and improve health decision-making? How can people accurately distinguish fact from falsehood amidst abundant algorithmically generated health information in digital media spaces? These are among the questions driving the research of several faculty members in the School of Communication.

Human-AI Interactions

Sai Wang, a Research Assistant Professor in the Department of Interactive Media (IMD), has been investigating public perceptions and understanding of AI’s involvement in the communication process. Smart technologies have redefined the interaction between humans and computers. For instance, voice assistants like Alexa, Siri, Bixby, and Microsoft’s Cortana can interpret and respond to human speech, emulating basic human-to-human conversation.

Wang contends that smart technologies with their own agency create a power dynamic that may be perceived as a threat to human freedom. She explores this possibility in her co-authored research on ‘Human-Internet of Things (IoT) Interaction’, published in Frontiers in Psychology. Her research examines how the social role and the technical capacities of an IoT agent exert persuasive influence on users.

In an experiment investigating the psychological tension in human-IoT interactions, Wang and her co-authors observe that when IoTs have a larger scope of control (e.g., smart homes), IoTs that perform more subservient roles, such as servants, assistants, or helpers, often elicit a more positive persuasive effect on users than IoTs that take on companion roles. She recommends that IoT developers “design a thoroughly user-centric interface that provide users with more power to control connected devices”.

Dr Sai Wang, Research Assistant Professor, Department of Interactive Media

Another issue is the efficacy of AI technologies incorporated into journalistic practice. Xinzhi Zhang’s work speaks to this problem. An Assistant Professor in IMD, Zhang is interested in how news chatbots – conversational software applications that imitate human dialogue – are transforming journalism. For instance, news chatbots can interact with news audiences by providing information, answering questions, assisting with subscriptions, giving recommendations, and performing other services that would otherwise have required person-to-person interaction.

In an article in Digital Journalism, Zhang and his co-authors evaluate the performance of Messenger chatbots within news media organisations. They find that in many cases the chatbots do not really offer functional interactions or demonstrate effective audience engagement. The authors surmise that “although chatbots may not truly function as intelligent agents to interact with the audiences, they may be a starting point for the iteration of small newsrooms with limited resources and further improvement”.

Predicting Health Behaviour using AI

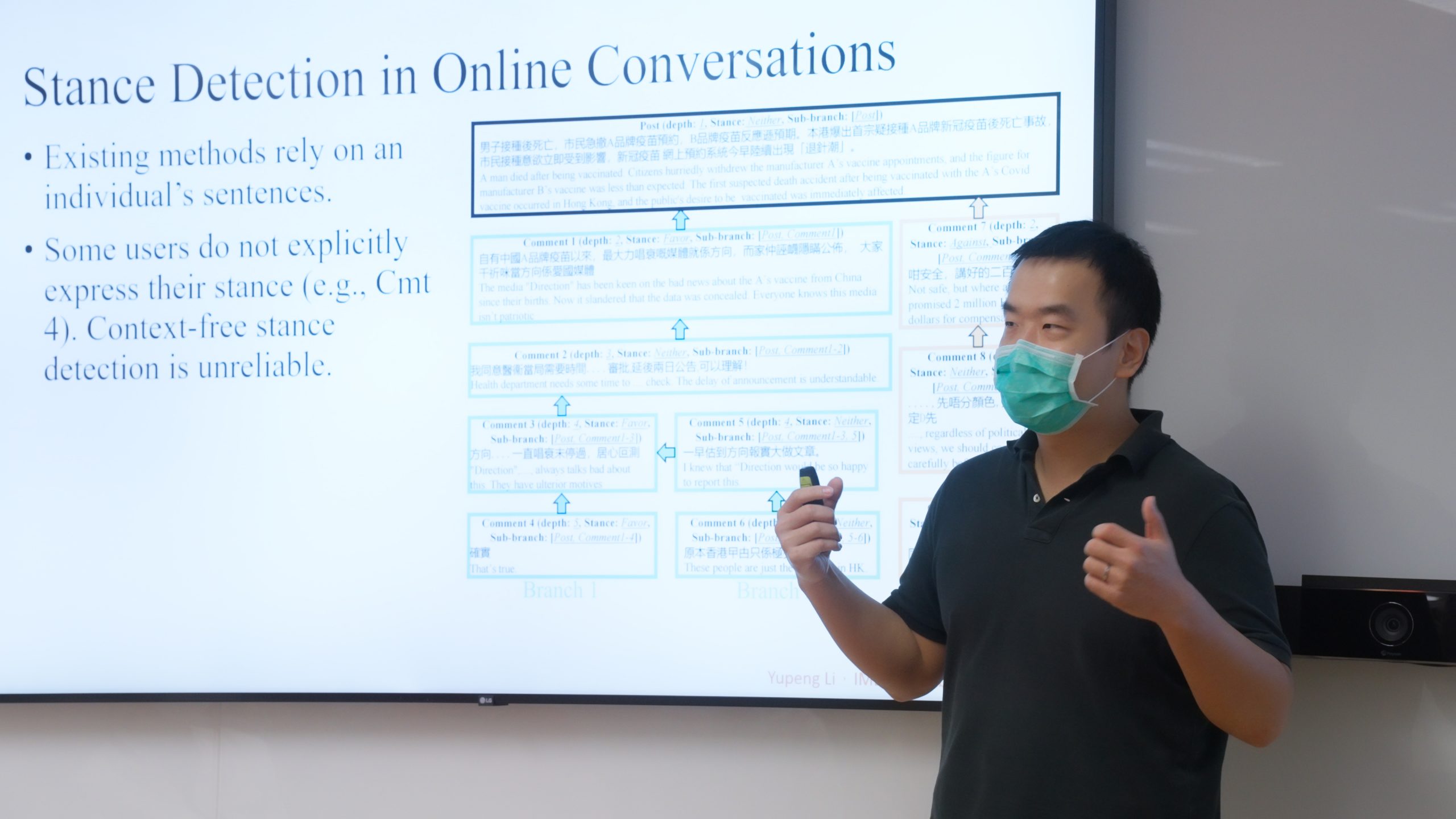

The World Health Organization (WHO) has adjudged vaccine hesitancy to be one of the major threats to global health. Yupeng Li, another Assistant Professor in IMD, has been trying to understand the public’s COVID-19 vaccination stances on social media by using novel big data and AI techniques.

In two research projects, Li and his team developed social computing paradigms to mine public attitudes towards COVID-19 using large-scale social media data. The first project focuses on detecting and understanding users’ positions on vaccination through the content they generate, such as online conversations. The second concentrates on users’ social interactions.

Li hopes to discover more possibilities through developing new (social) computing technology under the same framework he and his collaborators created in his recent projects. “We believe that our research can help policy makers make better use of the online social platform data to estimate and understand the real-time trend of the public opinions towards certain target, and then formulate relevant policies in Hong Kong”, he says.

Dr Yupeng Li, Assistant Professor, Department of Interactive Media

Digital Media and Health ‘Infodemic’

In the Department of Communication Studies, Assistant Professor Stephanie Tsang has been exploring the problem of health misinformation. Her recent research examines how different types of misinformation impact readers’ evaluations of messages. She has also been trying to identify the mechanisms underlying audience perceptions of message inaccuracy and fakeness.

The issue of whether people are susceptible to inaccurate information because of cognitive laziness, personal bias, preexisting beliefs, or predisposed identities is a major question at the heart of audience psychology research. Tsang, who leads the BU Audience Research Lab (BUAR) and directs the HKBU Fact Check service, tackles this problem using COVID-19 misinformation on social media as a case study in her article published in Online Media and Global Communication.

At the height of the pandemic, social media platforms were littered with COVID-19 misinformation, from conspiracy theories to fake news, false claims, and harmful health advice, all of which contributed to vaccine hesitancy across the world. In Hong Kong, for instance, even though people seemed to be aware of the vaccines’ safety, “this knowledge did not seem to improve their willingness to take the vaccine”, notes Tsang. She argues that people’s ability to critically analyse social media misinformation is dependent on several psychological factors.

Tsang observes that “among all the factors taken into consideration, distrust of science and pre-existing attitudes toward vaccination played the largest role in predicting judgments about inaccuracy and fakeness”. Surprisingly, supporting evidence has little impact on such perceptions.

Celine Song, a Professor in the Department of Journalism, has been studying ways to stem the tide of COVID-19 misinformation on social media. Health agencies and organisations like WHO and Hong Kong’s Centre for Health Protection have designed and relentlessly disseminated a variety of guides to combat misinformation about the virus and vaccines. But making these messages as effective as possible is still a major challenge.

Song emphasises the need to identify strategies that can correct health misinformation and to design remedial messages that can effectively debunk misperceptions. Her article, co-authored with Sai Wang and published in Health Education Research, reports findings from experiments that test how the evidence type and presentation mode of COVID-19 infographics affect people’s attitudes towards corrective messages on social media. The authors observe that people are more inclined to accept corrective messages that rely less on statistical evidence and that use a text-plus-image presentation format. “The findings of this study provide important practical guidelines for public health organizations to improve the effectiveness of corrective information on social media platforms”, the study concludes.

These research projects offer insights into the transformative impact of AI and computerised systems on health communication and behaviour. The studies further advance theoretical discussions of human-machine communication and how emerging technologies are integrated within the framework of human psychological behaviour.

Related Publications

Kang, H., Kim, K. J., & Wang, S. (2022). Can the IoT persuade me? An investigation into power dynamics in human-IoT interaction. Frontiers in Psychology, 13.

Song, Y., Wang, S., & Xu, Q. (2022). Fighting misinformation on social media: Effects of evidence type and presentation mode. Health Education Research, 37(3), 185–198.

Tsang, S. (2022). Biased, not lazy: Assessing the effect of COVID-19 misinformation tactics on perceptions of inaccuracy and fakeness. Online Media and Global Communication, 1(3), 469–496.

Zhang, X., Zhu, R., Chen, L., Zhang, Z., & Chen, M. (2022). News from Messenger? A Cross-National Comparative Study of News Media’s Audience Engagement Strategies via Facebook Messenger Chatbots. Digital Journalism, 1-20.